If you haven't heard about Rush JS yet, you are probably not alone. In my opinion, it's one of the hidden gems of the SPFx ecosystem, or even the larger web dev ecosystem for that matter. It's a great open source tool for managing large repositories (monorepos) containing multiple Node/npm projects with dependencies on each other. To learn more about Rush, you can visit its website:

https://rushjs.io/

In this post, we are specifically going to look at how we can use Rush to manage SPFx repositories containing multiple solutions including SPFx library components, web parts and extensions.

With SPFx library components being introduced in preview in v1.8 and expected to be generally available in v1.9, sooner or later you might find yourself trying to manage large SPFx repositories like this:


In this repo, there are 4 SPFx solutions: The org-app solution contains SPFx components which depend on org-library, which is an SPFx library component. org-library itself depends on another SPFx library component, util-library. And finally, another "consumer" solution, org-app2, depends directly on util-library.

When working in this type of repository, things will get tricky to manage real fast because of the various SPFx solutions, their dependencies on each other, needing to run gulp build or gulp serve multiple times after changing a single file, remembering which consumer projects to update after the libraries have been changed, managing multiple node_modules folders (if not using pnpm) etc. This is where Rush comes in.

If all projects are part of one Rush repository:

- You don't have to manage node_modules folders individually for each project. Rush will maintain a common node_modules folder and create symlinks in each project which point to it.

- Rush will also create a single lock file for your entire repository and all projects.

- You don't have to use npm link to connect your library to consuming webpart packages. Rush handles all the linking and unlinking for you.

- When you are working on the library and the consumer components simultaneously, you don't have to run gulp build multiple times. You just need to run "rush build" once. More on this later.

- When working on multiple libraries and components with different dependencies, you don't have to keep track of which webpart depends on which library. Rush will do this for you and handle the necessary updates.

- Based on your project dependencies, Rush will parallelise builds of projects which do not depend on each other, which reduces build time.
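To make the last two points a bit more concrete, here is a small TypeScript sketch of dependency-ordered building, using the four projects from the repo described above. It is a simplified model and not Rush's actual scheduler: the planBuildBatches function and the repo object are names I made up for illustration. Each batch contains projects whose dependencies were all built in earlier batches, so the projects inside one batch could be built in parallel.

```typescript
// Project name -> names of the local projects it depends on.
// The names mirror the example repository described earlier in this post.
type DependencyGraph = Record<string, string[]>;

const repo: DependencyGraph = {
  "util-library": [],
  "org-library": ["util-library"],
  "org-app": ["org-library"],
  "org-app2": ["util-library"],
};

// Groups projects into batches: every project in a batch only depends on
// projects from earlier batches, so a batch could be built in parallel.
// (Hypothetical helper for illustration, not part of Rush.)
function planBuildBatches(graph: DependencyGraph): string[][] {
  const built: string[] = [];
  const batches: string[][] = [];
  let remaining = Object.keys(graph);

  while (remaining.length > 0) {
    const ready = remaining.filter((name) =>
      graph[name].every((dep) => built.indexOf(dep) !== -1)
    );
    if (ready.length === 0) {
      // Nothing can be built: a cycle or a dependency missing from the graph.
      throw new Error("Cyclic or unknown dependency among: " + remaining.join(", "));
    }
    ready.sort();
    batches.push(ready);
    for (const name of ready) {
      built.push(name);
    }
    remaining = remaining.filter((name) => ready.indexOf(name) === -1);
  }
  return batches;
}

const batches = planBuildBatches(repo);
console.log(batches);
// → [["util-library"], ["org-app2", "org-library"], ["org-app"]]
```

Here org-library and org-app2 land in the same batch because neither depends on the other, while org-app has to wait for org-library.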

Now before you go converting all your existing repositories to Rush, there are a couple of considerations to think about as well:

- Most Rush commands only work with Git repositories. Rush uses git change tracking to determine which files changed and which projects to update. So, if you are using TFVC or any other version control system, you might be out of luck. More details on this here: https://github.com/Microsoft/web-build-tools/issues/1250

- When working on SharePoint Framework projects in a Rush repository, the local workbench seems to be broken currently. The SPO workbench works. A bug is logged here: https://github.com/SharePoint/sp-dev-docs/issues/3736

Now with all that out of the way, let's create a simple Rush repository with just a couple of SPFx components! What we are going to do is create a Rush repository, create an SPFx library component (corporate-library), then create an SPFx webpart component (corporate-apps) and then consume the library component from the webpart. We will then use Rush to build and manage this repository.

First, we need to perform some setup: install Rush globally (npm install -g @microsoft/rush), turn your root folder into a git repo (git init) and a Rush repo (rush init), and then commit the generated Rush files to git.

Next, we will create the SPFx library component:

Notice we are using the --skip-install flag as we don't want to install the npm packages right now. We will do this with rush later. Select the options as in the following image:

Add the SPFx library component to your git repo:

Next, we will create the SPFx webpart project:

We will again use the --skip-install flag to skip installing the npm packages.

Select the options as per the following image:

Now add the webpart project to your git repo:

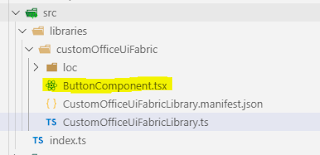

Your final solution structure should look like this:

Next, we need to tell Rush that the corporate-apps project has a dependency on the corporate-library project. We will do that by adding corporate-library, with the version from its own package.json, to the dependencies in the corporate-apps/package.json file.

After this, we need to configure Rush to work with our repository and project structure. For this, we need to update the rush.json file in the root folder.

We can define the Node, npm and Rush versions we will use for this repository. You can use other package managers like pnpm and yarn with Rush as well. For a list of all available options see here:

https://rushjs.io/pages/configs/rush_json/

More importantly, we need to define our project folders and package names in this file. This is what Rush uses to find folders corresponding to package names.

By default, the file has a lot of config options and comments, but we only need these values for the demo:
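As a rough guide, the handful of rush.json values this demo relies on can be sketched as a TypeScript object, so the shape is explicit. The field names (rushVersion, npmVersion, nodeSupportedVersionRange, projects, packageName, projectFolder) are the ones rush.json uses; the version numbers below are placeholders I picked for illustration, so use the versions you actually have installed. The project folders assume each solution sits directly under the repository root.

```typescript
// Shape of the few rush.json fields this demo needs.
interface RushProject {
  packageName: string;   // must match "name" in the project's package.json
  projectFolder: string; // path relative to the repository root
}

interface MinimalRushConfig {
  rushVersion: string;
  npmVersion: string;
  nodeSupportedVersionRange: string;
  projects: RushProject[];
}

// Version values are placeholders (assumptions), not taken from the demo repo.
const rushConfig: MinimalRushConfig = {
  rushVersion: "5.7.3",
  npmVersion: "4.5.0",
  nodeSupportedVersionRange: ">=8.9.4 <9.0.0",
  projects: [
    { packageName: "corporate-library", projectFolder: "corporate-library" },
    { packageName: "corporate-apps", projectFolder: "corporate-apps" },
  ],
};

// Print the object as the JSON you would place in rush.json.
const rushJson = JSON.stringify(rushConfig, null, 2);
console.log(rushJson);
```

The projects array is the important part: it is the mapping from package names to folders that Rush uses to link corporate-library into corporate-apps.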

Next, as we are going to use npm as the package manager, we need to delete the common\config\rush\pnpmfile.js file as it is only meant to be used with pnpm.

After this, it is time to install all the npm packages needed by all the projects in our repository. For that we just need to run:

Rush will install all npm packages in the common\temp\node_modules folder and create symlinks in each project to the common folder.

Next, we need to build our repository with rush build.

Due to the dependencies added to the corporate-apps/package.json file, Rush will build the library project first and then the consumer project.

Now we can use the code from our library project in our webpart project. Go to the corporate-apps\src\webparts\consumer\ConsumerWebPart.ts file and add the following code:
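As a minimal sketch of what consuming the library can look like, here is a self-contained TypeScript example. The CorporateLibrary class, its name() method and the renderConsumer function are stand-ins I made up so the snippet runs on its own; in the real web part you would import whatever your library project actually exports and use it inside render().

```typescript
// Stand-in for the class the library project exports. In the real repository
// you would import it from the library package instead, for example:
//   import * as corporateLibrary from "corporate-library";
// The class and method names here are assumptions for illustration.
class CorporateLibrary {
  public name(): string {
    return "CorporateLibrary";
  }
}

// Builds the markup that the web part's render() method would assign to
// this.domElement.innerHTML. (Hypothetical helper, not generated by SPFx.)
function renderConsumer(library: CorporateLibrary): string {
  return "<div>Calling library: " + library.name() + "</div>";
}

console.log(renderConsumer(new CorporateLibrary()));
// → <div>Calling library: CorporateLibrary</div>
```

Because Rush has already linked the library into the web part project, the import resolves to your local library project rather than to a published package.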

If you run rush build again, you will see that the library project is not rebuilt. This is because Rush knows that only the webpart files changed and that it should only update the relevant projects. Here is a working demo of the repository:


And that's it! You can now build on this and start adding more SPFx libraries and components to your repository.

Here is an image of a larger Rush repository in action (the same one mentioned at the start of this post). You can see that, depending on which files changed, Rush updates only the required projects.
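To illustrate the "only the required projects" idea, here is a small TypeScript sketch that works out which projects need a rebuild when a given project changes: the changed project itself plus everything that depends on it, directly or indirectly. This is a simplified model under my own assumptions, not Rush's implementation (Rush decides what changed from git change tracking). The projectsToRebuild function and the repo object are hypothetical names, and the graph is the four-project example from the start of this post.

```typescript
// Project name -> names of the local projects it depends on.
type DependencyGraph = Record<string, string[]>;

const repo: DependencyGraph = {
  "util-library": [],
  "org-library": ["util-library"],
  "org-app": ["org-library"],
  "org-app2": ["util-library"],
};

// Returns the changed projects plus every project that depends on them,
// directly or transitively. (Hypothetical helper, not part of Rush.)
function projectsToRebuild(graph: DependencyGraph, changed: string[]): string[] {
  const affected: string[] = changed.slice();
  let grew = true;
  // Keep sweeping the graph until no new dependents are discovered.
  while (grew) {
    grew = false;
    for (const name of Object.keys(graph)) {
      const dependsOnAffected = graph[name].some(
        (dep) => affected.indexOf(dep) !== -1
      );
      if (dependsOnAffected && affected.indexOf(name) === -1) {
        affected.push(name);
        grew = true;
      }
    }
  }
  return affected.sort();
}

console.log(projectsToRebuild(repo, ["org-app"]));
// → ["org-app"]
console.log(projectsToRebuild(repo, ["org-library"]));
// → ["org-app", "org-library"]
console.log(projectsToRebuild(repo, ["util-library"]));
// → ["org-app", "org-app2", "org-library", "util-library"]
```

A change in a consumer such as org-app touches only that project, while a change in util-library ripples out to everything that builds on it.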

Also, all your projects in the Rush repository are still standalone SPFx projects. You can navigate to each project's working directory and run gulp build, gulp bundle or gulp package-solution just like you would in normal SPFx projects.

Hope you find this post helpful! Let me know in the comments if you feel there is anything I have missed.

Links:

1) The simple Rush repository we built in this post:

https://github.com/vman/SPFxRushSimpleDemo

2) Slightly more complex Rush repository:

https://github.com/vman/SPFxRushComplexDemo

3) SPFx library component overview:

https://docs.microsoft.com/en-us/sharepoint/dev/spfx/library-component-overview

4) Rush website:

https://rushjs.io/